LiDAR vs cameras.

I've seen many people sharing Mark Rober's video on LiDAR vs. camera-based "autonomous drive."

While I believe the best combination at this point is radar + LiDAR + cameras, Tesla is doing a great job with just a camera-based system (go to YouTube and look for FSD videos from random people; the progress they've made is incredible). Other companies, such as Waymo, Cruise (until recently), and Wayve, are going with a full suite of sensors in vehicles that are not "supervised self-driving."

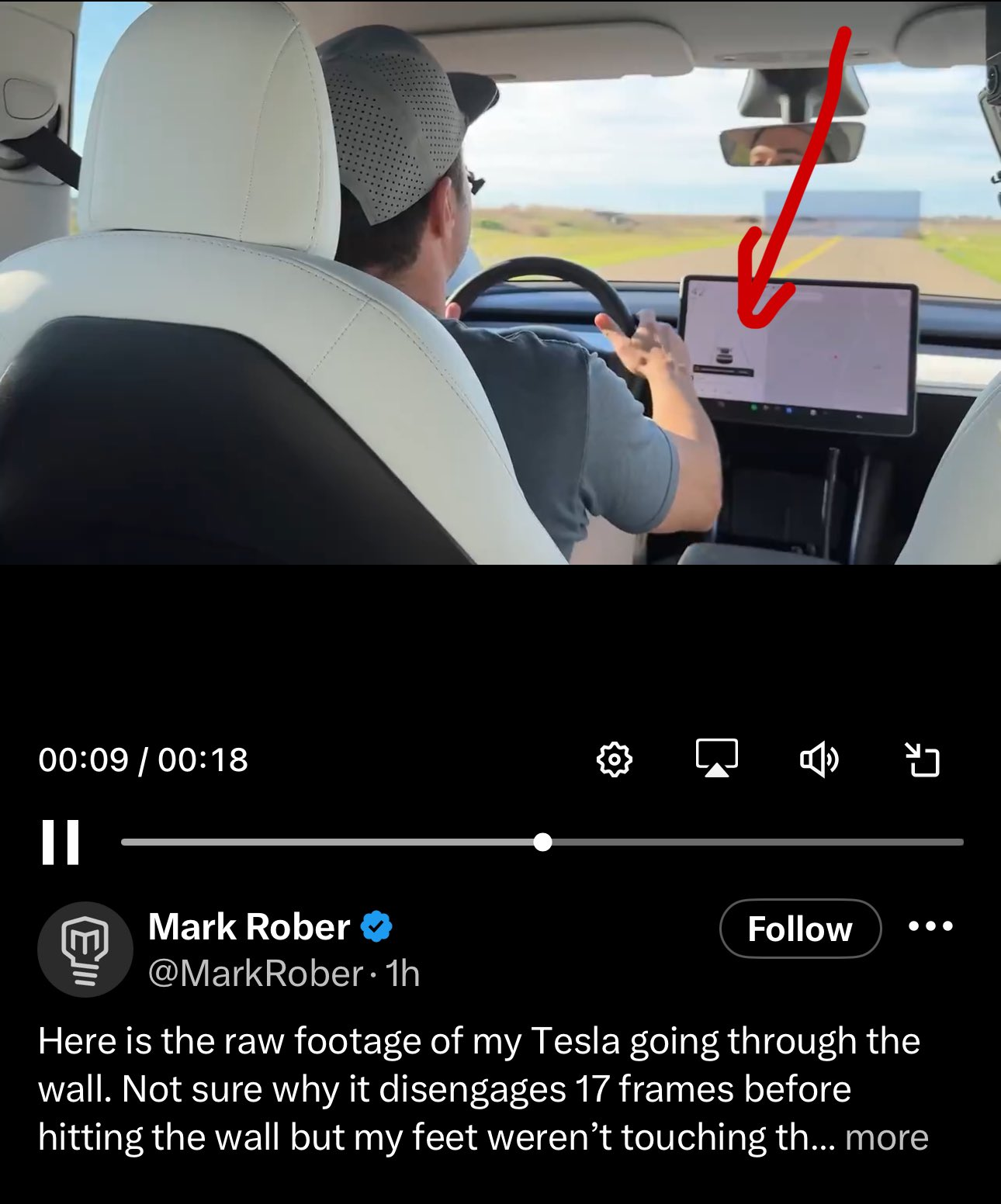

Now, bear with me - what Mark showed in the video WAS NOT FSD. Actually, when you check the video (or the part I'm talking about) second by second, you can see that Autopilot (Tesla's name for its ADAS features: obstacle avoidance, adaptive cruise control, lane centering, etc.) is disengaged, so it had no chance to brake. What should have worked here is obstacle avoidance (and it utterly failed).

Details like this make the entire video questionable.

But what about the real-life issues with the cameras?

I use Autopilot quite a lot on highways and motorways, as it makes driving more pleasant and I don't need to control the speed all the time - that's why adaptive cruise control exists in the first place, right? But every now and then I run into issues: phantom braking in random spots, and trouble engaging Autopilot in heavy rain, when direct sunlight hits the cameras, or when it's very dark at the side of the road. I had none of these issues in my other cars (which also had usable windscreen wipers; don't even get me started on how badly they work in a Tesla).

As FSD is unavailable in Europe, I must rely on YouTube videos to support my claims, but here we go.

It's not bad. But it's not perfect, and if we want to have full self-driving cars on the roads (like Robotaxi or Verne), we need them to be way better than what you see in these videos.

So what's the problem here?

Computer vision has advanced dramatically over the past decade, and you can run many CV models based on CNNs (convolutional neural networks), like YOLO, on a consumer-grade GPU with good results. Here are three examples I made on my PC two years ago using just object detection:

What Tesla uses in their system is many times more advanced, but it still has its limitations: cameras can get blinded by sunlight, and they need to stay clean and not get splashed in the rain. So they may work in California or Texas, but let's try them in the UK, Poland, or Sweden.

LiDAR, on the other hand, uses spatial information. It perceives depth by emitting laser pulses and measuring the time it takes for each pulse to reflect back after striking a nearby object. These measurements are compiled into a point cloud: a precise three-dimensional representation of the surrounding environment. Because LiDAR measures the depth and geometry of objects directly, it is not fooled by the visual ambiguities or optical illusions that can trip up a camera. In this sense, LiDAR functions similarly to a bat: visually blind but highly attuned to the spatial structure of its surroundings.
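To make the time-of-flight idea concrete, here is a minimal sketch in Python. It is not how any particular sensor (Luminar's or otherwise) is implemented; the function names, the `(round_trip_s, azimuth_deg, elevation_deg)` input format, and the axis convention are all assumptions made up for illustration. The only physics in it is that a pulse travels to the object and back, so the range is half the round-trip time multiplied by the speed of light.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def tof_to_range(round_trip_s: float) -> float:
    """Range in metres from a round-trip time; halved because the pulse goes out and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple:
    """Convert one return (range + beam direction) into an (x, y, z) point.

    Assumed convention (hypothetical): x forward, y left, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


def build_point_cloud(returns):
    """Turn a list of (round_trip_s, azimuth_deg, elevation_deg) into a list of 3D points."""
    return [to_point(tof_to_range(t), az, el) for t, az, el in returns]


if __name__ == "__main__":
    # Three made-up returns: straight ahead, 90 degrees to the left, slightly upward.
    sample_returns = [(1.0e-6, 0.0, 0.0), (2.0e-7, 90.0, 0.0), (5.0e-7, 0.0, 10.0)]
    for point in build_point_cloud(sample_returns):
        print(point)
```

A round trip of one microsecond works out to roughly 150 metres, which gives a feel for the timing precision a real sensor needs: an error of one nanosecond shifts the measured range by about 15 centimetres.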

This is an example of what a LiDAR point cloud looks like.

The issue with LiDAR is that it's way more expensive than cameras and/or radar.

(Btw, did you know that you have LiDAR if you have an iPhone 12 Pro or a newer Pro model? It's the thing that lets you do 3D scans of your surroundings and is responsible for the outstanding autofocus.)

The other car in Mark's video used Luminar technology. I first read about them in Wired in 2018, and now they provide the LiDAR for the ADAS in Volvo's EX90.

The summary of all this is that Tesla has bet on a camera-based system that works well in good conditions, while others have bet on a suite of cameras, radars, and LiDARs. We've yet to see which approach is right - my bet is on the latter, at least for now.